stanford_headlinez / Week 3 / due 2018-01-23 23:59¶

This assignment basically asks you to implement the world’s worst web-scraper. If you know anything about the web or HTML or data deserialization, this will annoy the heck out of you. But if you’re relatively new to these concepts, then this somewhat contrived lesson not only gives you a taste of what “web-scraping” is all about, but also the core concept of how data is extracted from structured text.

- Description

- Requirements

- Expected files

- Expected behavior and results

- Test A. The function fetch_html() accepts a URL as argument and returns a string

- Test B. The function extract_headline_text() takes a string with a specified pattern and returns a headline

- Test C. The function parse_headline_tags() takes a string and returns a list of strings

- Test D. The function print_hedz() accepts a URL as string and prints the Stanford-formatted headlines found on that webpage

- Background and context

- What you should know/learn to do

- Walkthrough

Description¶

Why do I call it the “world’s worst web-scraper”? Because it assumes that you know virtually nothing about the Web, HTTP, or HTML. Later on, you’ll be expected to know what those concepts are, particularly what it means to use a special parser for HTML – such as Beautiful Soup – to efficiently and robustly deserialize values from a webpage.

But this lesson only means to give you the broadest exposure to web-scraping, such as how to download files using Requests, and to realize that HTML is just text, and that there’s more to a web request than getting what you see in the web browser.

This is less a lesson about HTML/web stuff than it is a lesson on better understanding Python functions, and how to break up functionality of a big program into small, separate functions. And also, how text is data.

Overall functionality¶

Your scraper will be visiting the Stanford news website, which can be found at this URL:

However, for the purposes of test consistency (since the Stanford live site always changes), I’ve created two static, unchanging pages to test against:

While the specifics of the headline text may differ, the HTML structure is the same, and the visual structure is pretty much the same:

For this assignment, you’ll be creating a single script named scraper.py with a function named print_hedz().

That print_hedz() function accepts a URL. What it does is print news headlines, if any exist:

>>> import scraper

>>> scraper.print_hedz('https://wgetsnaps.github.io/stanford-edu-news/news/')

Ira M Friedman dead at 69

A window into long-range planning

Starting small on the path to rebuilding our bodies

Friends’ genes may help friends stay in school

Stephen Gallagher appointed university CIO

3-D images of artifacts enrich experience for students, faculty

Innovator, imaging expert Juergen Willmann dies at 45

State marijuana laws at odds with federal enforcement

Skeleton for scraper.py¶

While this assignment consists only of a single script, scraper.py, this script will consist of 4 separate functions:

def print_hedz(url='https://www.stanford.edu/news/'):

"""

url can point to any website, but by default, it points to the

official Stanford News website

This function does not return anything. It just prints to output.

"""

txt = fetch_html(url)

htags = parse_headline_tags(txt)

for t in htags:

hedtxt = extract_headline_text(t)

print(hedtxt)

def extract_headline_text(txt):

"""

The `txt` argument is a string, ostensibly text that looks like what the HTML

is for headlines on Stanford's news site, e.g.

<h3><a href='https://news.stanford.edu/2018/01/1/hello-stanford>Hello Stanford</a></h3>

This function returns a string, e.g. "Hello Stanford"

"""

def parse_headline_tags(txt):

"""

The `txt` argument is a string, ostensibly text that looks like the raw HTML

that can be found at https://wgetsnaps.github.io/stanford-edu-news/news/simple.html

This function returns a list of strings, each string

should look like the raw HTML for a standard Stanford news headline, e.g.

[

'<h3><a href='https://news.stanford.edu/2018/01/1/hello-stanford>Hello Stanford</a></h3>',

'<h3><a href='https://news.stanford.edu/2018/01/1/bye-stanford>Bye Stanford</a></h3>'

]

"""

def fetch_html(url):

"""

The `url` argument should be string, representing a URL for a webpage

This function returns another string -- the raw text (i.e. HTML) found at the given URL

"""

http://www.compciv.org/guides/python/fundamentals/function-definitions/

For this assignment, you will create a single Python script named scraper.py. This script will be composed of several functions. However, for the end user, using your scraper should be as simple as this:

Which, if you were to visit it in your web browser, should look something like this:

Your web scraper should parse the HTML and extract just the news headlines, and then print the text of those headlines to screen, i.e.

.

Requirements¶

This section details the exact requirements I expect from your homework folder, including the existence and correct spelling of filenames, to expected behavior when I run your code. You might as well assume that your code is being run by an automated process, a process that will fail upon even a typo.

In places where you see, SUNETID, e.g.

compciv-2018-SUNETID/week-03/stanford_headlinez/

Replace it with your own SUnet ID. For example, if your SUnet ID is dun:

compciv-2018-dun/week-03/stanford_headlinez/

Expected files¶

Your compciv repo is basically a folder of files, what’s on Github.com is basically the same as what’s on the repo as stored on your own computer. But for the sake of clarity, I explain what things should look like whether I’m visiting your repo on Github.com, or if I’ve cloned your repo onto my own computer.

When I visit your Github.com repo page¶

I expect your Github repo at compciv-2018-SUNETID repo to have the following subfolder:

compciv-2018-SUNETID/week-03/stanford_headlinez/

On this subfolder’s page, I would expect the file tree to look like this:

└── scraper.py

When I clone your Github repo¶

If I were to clone your repo onto my own computer, e.g.

$ git clone https://github.com/GITHUBID/compciv-2018-SUNETID.git

I would expect your homework subfolder to look like this:

compciv-2018-SUNETID/

└── week-03/

└── stanford_headlinez/

└── scraper.py

Expected behavior and results¶

After I’ve cloned your Github repo, assume that I will run the following shell commands and/or Python scripts, and expect the stated output and effects.

For all of these tests, assume that I’m in the homework directory, i.e. I’ve changed into the directory like this:

$ cd compciv-2018-SUNETID/week-03/stanford_headlinez

Test A. The function fetch_html() accepts a URL as argument and returns a string¶

If I run the following Python sequence:

import scraper

url = 'https://compciv.github.io/stash/hello.html'

x = scraper.fetch_html(url)

print(x)

I expect the following output to screen:

<h1>Hello, world!</h1>

Test B. The function extract_headline_text() takes a string with a specified pattern and returns a headline¶

If I run the following Python sequence:

import scraper

hedtxt = """

<h3><a href='https://news.stanford.edu/2018/01/1/hello-stanford>Hello Stanford</a></h3>

"""

hed = scraper.extract_headline_text(hedtxt)

print(hed)

I expect this exact output to my screen:

Hello Stanford

Test C. The function parse_headline_tags() takes a string and returns a list of strings¶

If I run the following Python sequence:

import scraper

rawhtml = """

<html><h1>blah</h1>

<a href="blah"></a>

<h3><a href='https://news.stanford.edu/2018/01/1/hello-stanford>Hello Stanford</a></h3>

<h3><a href='https://news.stanford.edu/2018/01/1/bye-stanford>Bye Stanford</a></h3>

</html>"""

htags = scraper.parse_headline_tags(rawhtml)

print(len(htags))

for h in htags:

print(h)

I expect this exact output to my screen:

2

<h3><a href='https://news.stanford.edu/2018/01/1/hello-stanford>Hello Stanford</a></h3>

<h3><a href='https://news.stanford.edu/2018/01/1/bye-stanford>Bye Stanford</a></h3>

Test D. The function print_hedz() accepts a URL as string and prints the Stanford-formatted headlines found on that webpage¶

If I run the following Python sequence:

import scraper

testurl = 'https://wgetsnaps.github.io/stanford-edu-news/news/simple.html'

scraper.print_hedz(testurl)

I expect to see this print to screen:

A window into long-range planning

3-D images of artifacts enrich experience for students, faculty

What you should know/learn to do¶

- iPython

- how to open iPython

- how to enter (sometimes paste) and run Python code interactively

- how to use the help() and type() functions to inspect objects and results

- Modules

- How to import modules

- Functions

- How to define a function

- How to define arguments for a function

- How to define a default argument for a function

- How to define a return value for a function

- A little bit about variable scope (i.e. what you should name variables inside a function)

- Loops

- What a for loop is

- How to loop through each item in a sequence, e.g. a list of strings

- Conditionals

- What a

ifstatement is - What the

inoperator does - How to test if one string is inside another

- What a

- Strings

- How to

splita string by a symbol or delimiter - How to use

splitlines()

- How to

- Using third party libraries like Requests

- How to use the

importstatement to call in the Requests library - How to use

requests.get(), and what it returns - How to get the text content from a Response object

- How to use the

Getting the homework directory set up¶

Let’s try to create the homework directory using just the command-line, for practice:

(note that there is NOTHING about Git/Github here…)

$ cd Desktop/compciv-2018-SUNETID

$ mkdir week-03

$ mkdir week-03/stanford_headlinez

$ cd week-03/stanford_headlinez

# open up a text editor instance based in this directory

$ code .

Paste in the skeleton for scraper.py¶

You can find a template earlier in the lesson: Skeleton for scraper.py

How to push onto Github using your command-line (Terminal/Shell)¶

Note that these instructions only apply if your Github/git repo is…correctly set up.

How do you know if you are not correctly set up? If you get any kind of error message (and if so, just email me).

Assuming that your compciv homework folder is off of your Desktop folder:

$ cd Desktop/compciv-2018-SUNETID # change into the homework directory, assuming you're in your home directory

$ git add week-03/stanford_headlinez # adds the directory and anything inside the directory

$ git commit -m 'week3'

$ git push # may have to enter in Github username and password

Visit the news page in your web browser¶

Visit one of the test pages in your web browser:

- https://wgetsnaps.github.io/stanford-edu-news/news/simple.html

- https://wgetsnaps.github.io/stanford-edu-news/news/

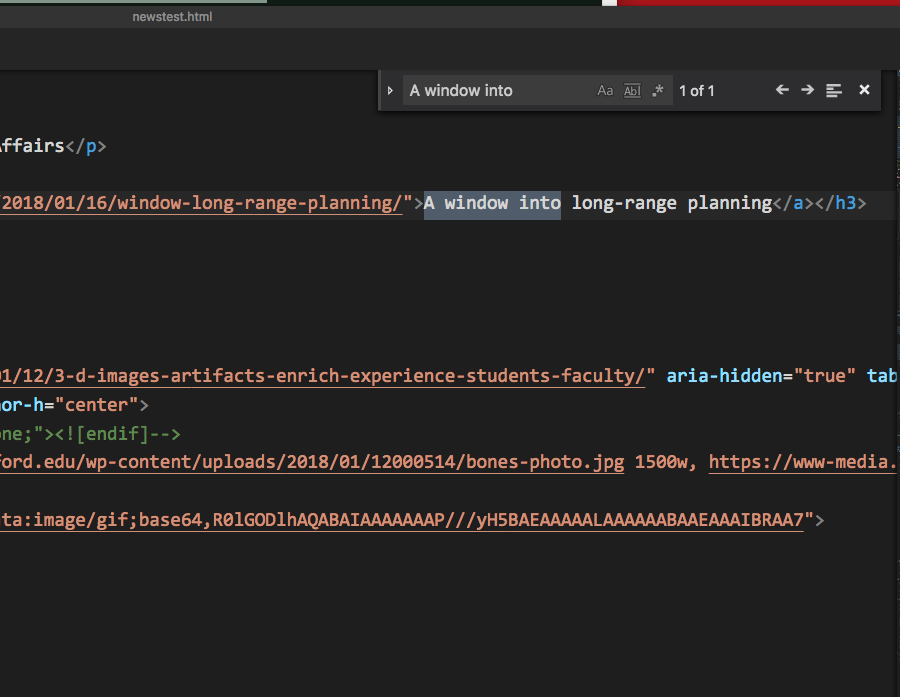

Try these things to see the raw HTML

- Use the Inspect Element in your Chrome browser’s right-click context menu to get a “pretty” view of what the HTML for a news headline looks like (primer here

- Try to view the source of the HTML.

- Use the

curlcommand to download the raw HTML into your Terminal/Shell - Download the webpage as a file and open it using your code editor

In your text editor, use the text-finding tools (Command/Ctrl-F) to search for where the human-readable headlines are in the HTML:

Walkthrough¶

Old-fashioned algorithm¶

As with every coding problem, if you don’t know where to start, always think about how you would do it the old-fashioned way.

To keep things simple, let’s use this simple snapshot of the Stanford News page, in which there are only 2 headlines:

https://wgetsnaps.github.io/stanford-edu-news/news/simple.html

How would you solve this problem as a human? Here’s one way to describe it:

I visit the URL at https://wgetsnaps.github.io/stanford-edu-news/news/simple.html

I wait for the contents of the webpage to load up

I look for the text that looks like the kind of headlines we are expected to read

For each of those headlines

- I type out the text of the headline, e.g. ‘A window into long-range planning’

Breaking it down for Python¶

The reason why this problem asks you to write 3 functions – all of which are called by a 4th function – is to get you in the habit of breaking every problem down into separate, sometimes ridiculously simple tasks.

You can review the functions here: Skeleton for scraper.py

You’ve basically been given the print_hedz function. If you define the other 3 functions exactly as I want them, you shouldn’t have to change print_hedz at all.

These 3 mini functions can be done in any order. As you get experienced, I recommend going from smaller to big. But if you’re new, sometimes it’s easier to start with the big, most complicated functionality, because you’re typically used to thinking in big steps when using your computer normally.

For each function, start out in interactive Python (i.e. iPython) mode to test everything out. Once you know what you need to do, then add code into your scraper.py file.

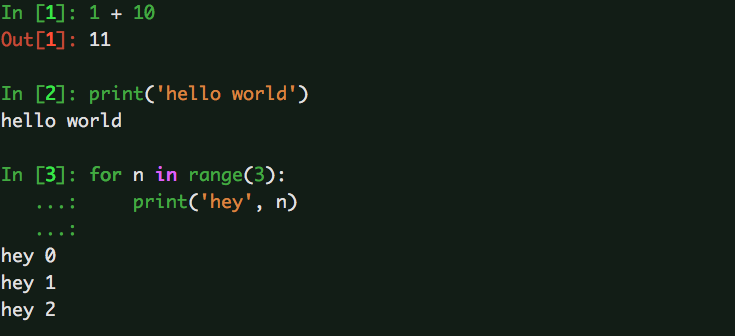

A note about iPython notation¶

For this guide, I’ll be using the following prompt convention to indicate an “interactive Python prompt”, and the value that’s printed when hitting Return:

>>>> 1 + 10

11

>>>> print('hello world')

hello world

>>>> for n in range(3):

print('hey', n)

hey 0

hey 1

hey 2

If you are using iPython, your Terminal/shell will look something like this:

Writing fetch_html() with the Requests library¶

The fetch_html function “simply” takes a URL argument and gets the data/content/text at that URL. However, any step that involves connecting to the Internet is inextricably a complicated step.

Biggest error: Not checking to see if your web/Internet connection works. Open up a website using your browser before going into Ipython.

You can read a bit about the Requests library in this basic tutorial. The key thing is to realize that it’s a third-party library (one that should have come pre-installed with Anaconda), and that we use the import statement to bring it in.

>>>> import requests

The requests module has a get method, but use your Tab key to get a list of all possible methods, just to see what’s there.

Before we try to download a page, let’s choose a page with the simplest HTML possible – use your browser to inspect the HTML so you know what to expect:

https://compciv.github.io/stash/hello.html

Now use requests.get():

>>>> requests.get('https://compciv.github.io/stash/hello.html')

<Response [200]>

What is that <Response [200]> object that is return by requests.get? It doesn’t look like any of the Python built-in object types (e.g. string, number, lists, etc). In order to inspect that return object, we have to “capture” it – easiest way to do so is with variable assignment:

>>>> thing = requests.get('https://compciv.github.io/stash/hello.html')

>>>> type(thing)

requests.models.Response

OK, that’s not much more help, though at least we can Google what that object is. Type thing. (or whatever you named your variable) and hit the Tab key to get a list of that object’s attributes/properties/methods.

Some methods/attributes that we will find quite useful:

>>>> thing = requests.get('https://compciv.github.io/stash/hello.html')

>>>> thing.status_code

200

>>>> thing.url

'https://compciv.github.io/stash/hello.html'

>>>> thing.text

'<h1>Hello, world!</h1>\n'

>>>> thing.content

b'<h1>Hello, world!</h1>\n'

>>>> thing.headers

{'Server': 'GitHub.com', 'Content-Type': 'text/html; charset=utf-8'} # and more stuff...

Seems that the text attribute contains what we want – the text content of the webpage at the given URL. If we were to start from the beginning:

import requests

url = 'https://compciv.github.io/stash/hello.html'

resp = requests.get(url)

print(resp.text)

But keep in mind that we do not want this functionality to print its text content. We want a function that returns the text content of the webpage.

Writing parse_headline_tags() as a sloppy way to parse HTML¶

When you learn what HTML actually is, and how to actually parse it like a sane person, you’ll see this exercise for being pretty dumb. But, it’s not a bad way to think of the basic problem of wrangling raw data to get what we want.

The parse_headline_tags function is meant to take one argument – a string. In the context of our program, this string is the entire HTML of a given webpage, i.e. the return value of fetch_html.

As defined in the requirements, in needs to do something with this giant string – basically, return a list of strings, with each string looking like what a Stanford news headline looks like.

Given the HTML on this page:

Here is the HTML text that corresponds to the headlines:

<h3><a href="https://news.stanford.edu/2018/01/16/window-long-range-planning/">A window into long-range planning</a></h3>

<h3><a href="https://news.stanford.edu/2018/01/12/3-d-images-artifacts-enrich-experience-students-faculty/">3-D images of artifacts enrich experience for students, faculty</a></h3>

Note that parse_headline_tags does not have the job of getting the actual headline text, i.e. “A window into long-range planning” – that’s what extract_headline_text will do.

The trick to this function is understanding the split() and splitlines() method works for string objects. As always, open up iPython and write some test examples:

>>>> mystring = 'dan is awesome'

>>>> wat = mystring.split(' ')

>>>> type(wat)

list

>>>> len(wat)

3

>>>> for w in wat:

print(w.upper())

'DAN'

'IS'

'AWESOME'

The splitlines() method is basically split() (I’m going to ignore the complexity of newline characters for now).

Try creating a multi-line string in Python by using triple-quotes as the delimiter:

>>>> mystring = """Dan

is

awesome

"""

>>>> lines = mystring.splitlines()

I’ll let you inspect/test that on your own (i.e. what type of thing is referred to in the lines variable?)

Now, think of how to use a conditional state and the in operator to test each line for a condition:

>>>> mystring = """dan

eats

bugs

"""

>>>> lines = mystring.splitlines()

>>>> for line in lines:

if 's' in line:

print('hey', line)

hey eats

hey bugs

The final part of this – how do we add things to a list?

Again, start simple:

>>>> mylist = []

>>>> mylist.append('doe')

>>>> mylist.append('ray')

>>>> mylist.append('mei')

>>>> len(mylist)

3

How to put it all together: instead of a for-loop that prints 'hey', line – have the for-loop append line to a list, and then return that list.

Writing extract_headline_text() to do even more sloppy HTML extraction¶

The trick to this function is to think small. Think of all the other work done by the other functions.

Think what is left to be done. Think of what the stated requirements are for this function:

- It accepts a string as an argument

- It returns a string as an argument

The parse_headline_tags function returns a list of strings. And what do each of those strings look like?

Like this:

'<h3><a href="https://news.stanford.edu/2018/01/16/window-long-range-planning/">A window into long-range planning</a></h3>'

What is the “old-fashioned” algorithm – i.e. how you would describe it to a human – for extracting the headline from the HTML above?

Think about it in the most basic way.

Then. consider the string’s split() method again, how it works, and what it returns. Note how I use it to extract 'awesome' from the string below:

>>>> mystr = 'dan>is>awesome<dude'

>>>> xlist = mystr.split('>')

>>>> xlist

['dan', 'is', 'awesome<dude']

>>>> xlist[2]

'awesome<dude'

>>>> xlist[2].split('<')

['awesome', 'dude']

>>>> xlist[2].split('<')[0]

'awesome'